Everyone has had the experience, staring at a browser and wondering why a particular website was taking such a long time to load. What makes websites slow can depend on several factors. Is the code efficient? Is the webserver efficient? How efficient is the storage? As a hosting provider mindful of sustainability, we are always trying to improve performance on our side, while also keeping redundancy in place as much as possible. Recently, we noticed one of our SQL servers was getting slower over time for no apparent reason, so we dug a little deeper to see how we could improve its performance.

Performance and the MySQL database

Databases are usually key in websites performance, since most of them use databases to store and retrieve data. MySQL is a widely used open-source database management system (RDBM). Among its features, it is ubiquitous, it provides native support for new technologies, it is flexible and it is quite simple to use and get it running. It offers various storage engines with different characteristics, so one can choose the engine that best suits the workload at hand. Each engine has its own settings that can be tuned to your own taste. This brings a lot of flexibility, but, at the same time, MySQL ends up having a large amount of different settings to be tuned.

Tuning MySQL

When tuning MySQL servers, the first thing you usually want to pay attention to are buffer pools and caches. Buffers and caches are used to improve performance by storing and retrieving frequently accessed data. They are storage components kept in main memory (Random Access Memory, shortly RAM) that provide faster data access than disks. For example, the article your are reading now, is the latest on our blog. Probably, this is currently the most requested article, so the most often requested from the servers. A smart server will cache this blog in memory, so every time it is requested, it will be read from memory and does not have to look on disk. Similarly, databases use different types of caches to store things such as queries, open tables, threads etcetera. Hence, anyone would usually think the bigger a cache is, the better. Surprisingly enough, this is not always the case, and it should be kept in mind that caching also introduces some overhead. Apart from providing quick access to data, caches also need to invalidate outdated entries and remove entries in an atomic way when the cache gets full. This involves an overhead that can downgrade performance if the cache is not exploited and/or if modifications in the cache aren't performed efficiently.

Pinpointing the problem

As a start point of our research, we used the mysqltuner script to get some general advice about our current configuration. As expected, it suggested we increased our buffers and caches sizes, specifically, the query cache size. The query cache retrieves queries that exactly match prior queries. Initially this sounds like a good idea. However, looking at how query cache works, we discovered a trade off between caching queries and the overhead of providing atomic access and invalidating entries in the cache. This cache holds a single lock to perform changes and invalidations in a coarse grain, which is not as efficient as it could be. This can end up causing contention problems as well as stalls, which, unfortunately, was the case with our SQL server.

What happened after disabling the cache

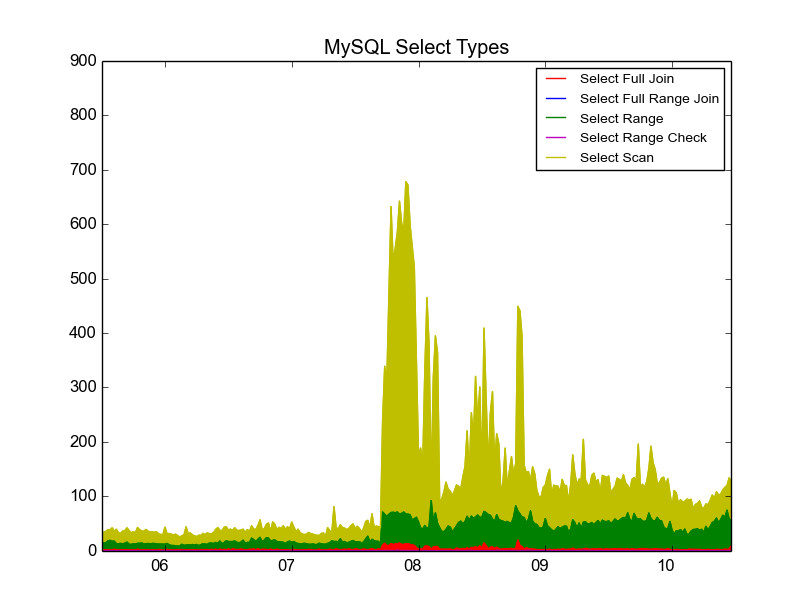

As soon as we disabled the cache, we could see the usage of some MySQL parameters started to increase. We were no longer hitting the query cache. As an example, on this first graph, we can see how select queries drastically grew once the query cache was turned off (on the 7th evening).

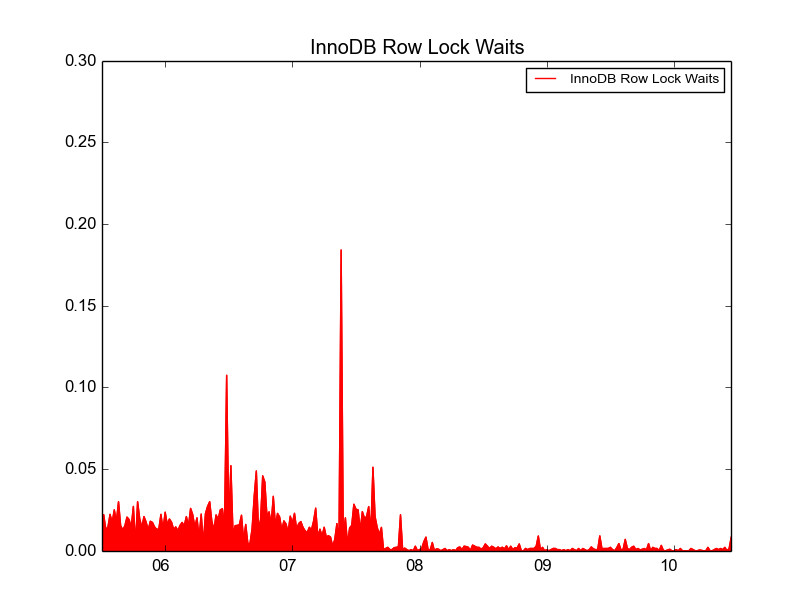

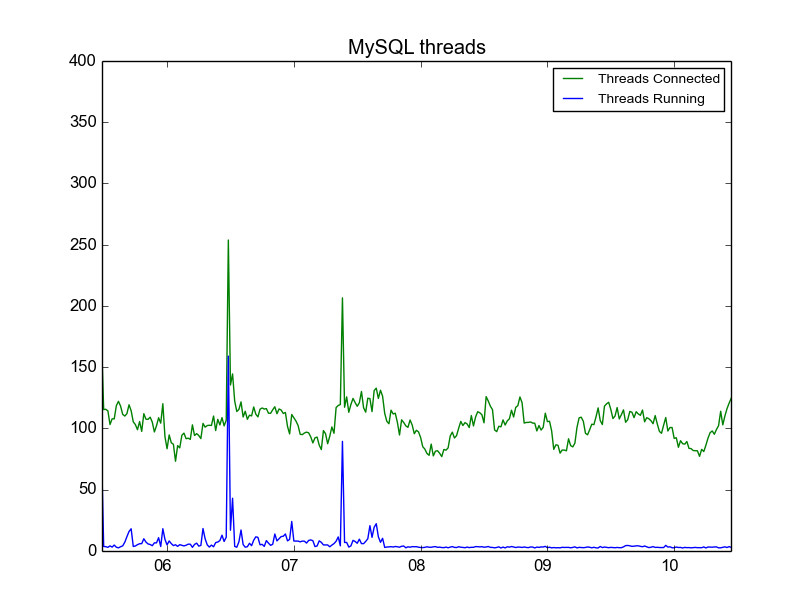

Yet, we also saw latency time dropped, increasing our response time. The InnoDB Row Lock Waits graph shows the number of times (in milliseconds) that InnoDB has waited to grant row locks. By reducing the lock waits, requests were served faster. This is also reflected in the MySQL threads graph. The number of running threads significantly decreases since they attend requests quicker.

New equipment and outdated query caching

In the old days, servers were single core with limited amount of memory. Nowadays, we have multicore servers with higher amounts of RAM, and, in our case, faster storage with SSD devices. Query cache was designed in the past and hasn't been adapted for new architectures. In this case, operating system caches and SSD devices seem to work better than query cache.

So, what's next?

Currently, we are investigating if we should apply this setting to other servers. Every server has a particular workload and should be considered individually. Besides that, we are also researching if cache solutions can help improving performance. Redis for distributed caching, APC/APCu (we allow both), or the use of memcache (an external cache solution) could still allow us to cache some queries while providing good performance.